Technical Report 313, c4e-Preprint Series, Cambridge

Leveraging text-to-text pre-trained language models for question answering in chemistry

Reference: Technical Report 313, c4e-Preprint Series, Cambridge, 2023

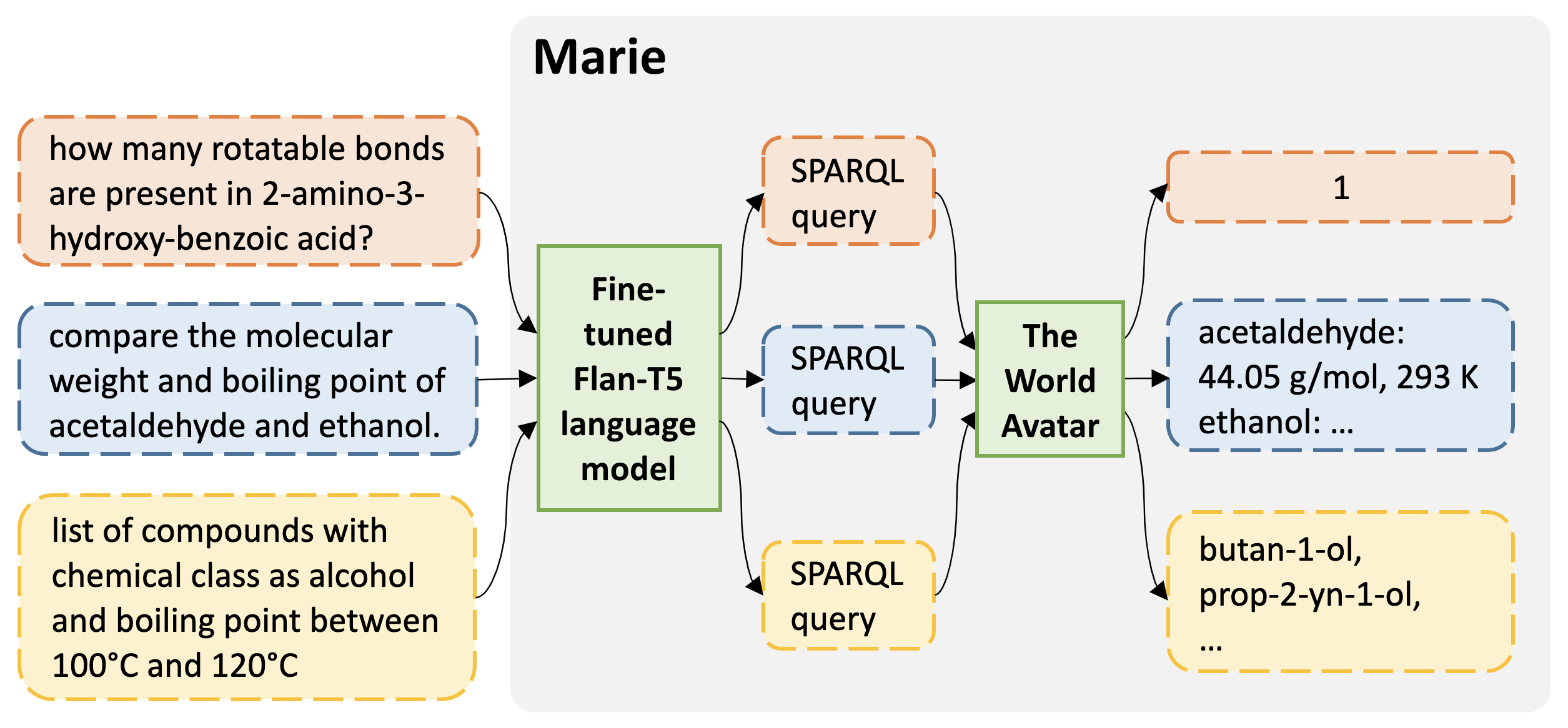

- A QA system for chemistry that leverages pre-trained language models to translate natural language questions into SPARQL queries.

- The QA system can resolve complex queries that involve many relation hops.

- The QA system boasts high accuracy and the flexibility to adapt to changes and evolution in the knowledge graph without necessitating retraining.

In this study, we present Marie, a question answering (QA) system for chemistry that uses a text-to-text pre-trained language model to retrieve data accurately. The underlying data store is "The World Avatar" (TWA), a general world model consisting of a knowledge graph (KG) that evolves over time. TWA includes information about chemical species, such as their chemical and physical properties, applications, and chemical classifications. Building on our previous work on knowledge graph question answering (KGQA) for chemistry, this version of Marie uses a fine-tuned Flan-T5 model to translate natural language questions directly into SPARQL queries, with no separate components for entity and relation linking. The system returns accurate results for complex queries that involve many relation hops and balances correctness and speed for real-world usage. This approach offers significant advantages over the prior implementation, which relied on knowledge graph embedding. Specifically, the updated system achieves high accuracy and accommodates changes and evolution in the data stored in the knowledge graph without retraining. Our evaluation results show that the improved system is more accurate than its predecessor and better able to answer complex questions.
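To make "many relation hops" concrete, the following minimal sketch shows the general shape of a multi-hop SPARQL query that a translation step of this kind would target. It is not Marie's implementation: in the system described, the fine-tuned Flan-T5 model emits the query text directly from the question. The function name `build_multi_hop_query`, the `example.org` IRIs, and the predicate names are all hypothetical and are not taken from The World Avatar.

```python
from typing import List


def build_multi_hop_query(start_iri: str, predicates: List[str]) -> str:
    """Chain predicates into a SPARQL SELECT that follows one hop per predicate.

    Illustrative helper only (hypothetical name); it is not part of Marie.
    """
    if not predicates:
        raise ValueError("at least one predicate is required")

    lines = []
    subject = f"<{start_iri}>"
    for hop, predicate in enumerate(predicates, start=1):
        obj = f"?v{hop}"  # fresh variable for the node reached by this hop
        lines.append(f"  {subject} <{predicate}> {obj} .")
        subject = obj  # the next hop starts where this one ended

    body = "\n".join(lines)
    # After the loop, `subject` holds the variable bound by the final hop.
    return f"SELECT {subject} WHERE {{\n{body}\n}}"


# Hypothetical IRIs, assumed purely for illustration.
query = build_multi_hop_query(
    "http://example.org/species/benzene",
    ["http://example.org/hasUse", "http://example.org/hasChemicalClass"],
)
print(query)
```

Each additional predicate adds one triple pattern and one intermediate variable, which is why questions spanning several relations produce longer queries.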


Material from this preprint has been published in ACS Omega.
