Technical Report 268, c4e-Preprint Series, Cambridge

Predicting power conversion efficiency of organic photovoltaics: models and data analysis

Reference: Technical Report 268, c4e-Preprint Series, Cambridge, 2021

- Neural and baseline models assessed for predicting PCE of organic photovoltaics.

- Computational (CEPDB) and experimental (HOPV15) literature datasets considered.

- CEPDB was fitted well by all models, but the predicted PCEs disagree with experiments.

- HOPV15 was fitted poorly, with the baseline models performing better than the neural models.

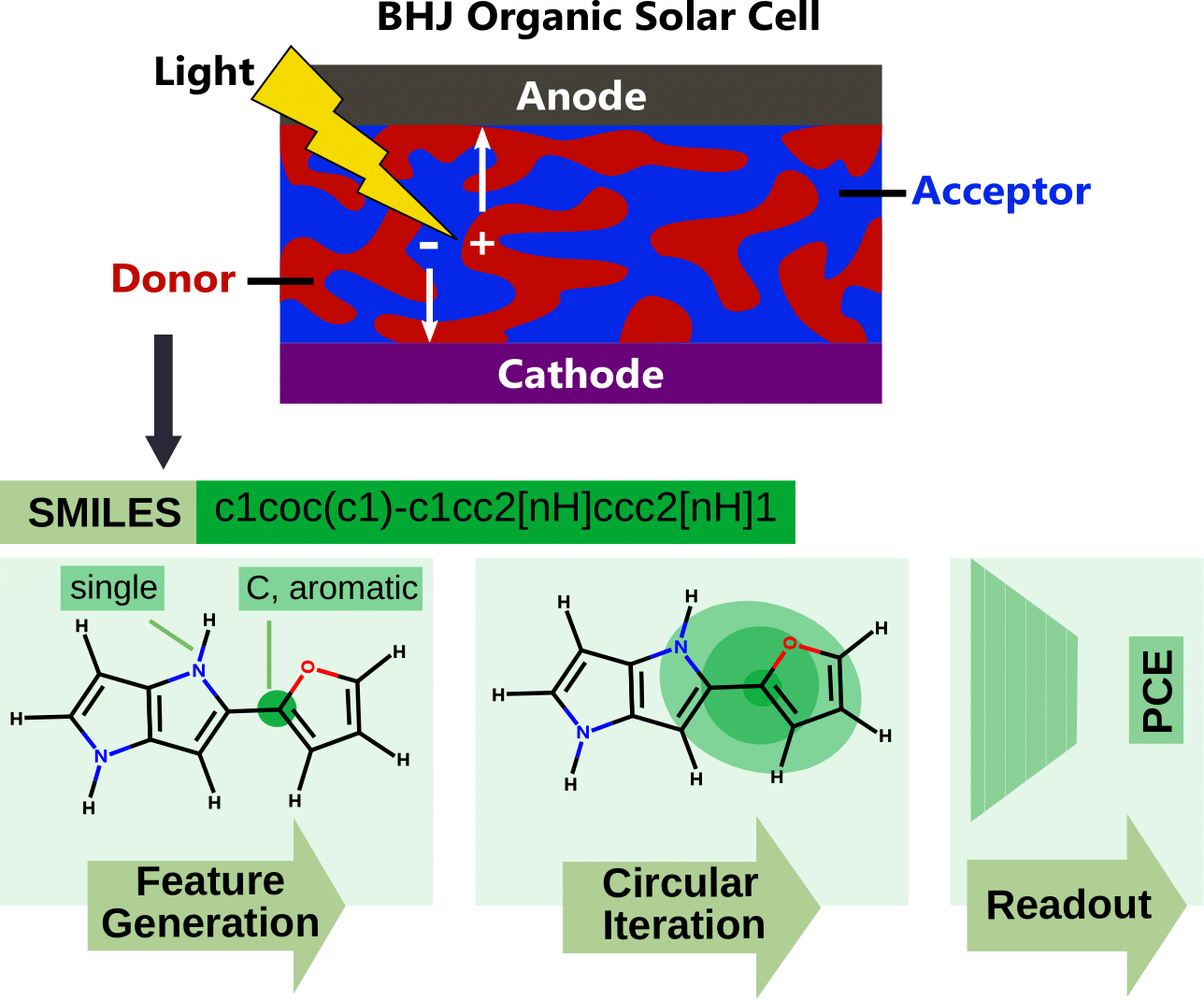

In this paper, we assess the ability of three neural and three baseline machine learning models to predict the power conversion efficiency (PCE) of organic photovoltaics (OPVs) from molecular structure information. The bi-directional long short-term memory (gFSI/BiLSTM), attentive fingerprints (Attentive FP), and simple graph (Simple GNN) neural networks, as well as the baseline support vector regression (SVR), random forest (RF), and high dimensional model representation (HDMR) methods, are trained on both the large computational Harvard Clean Energy Project Database (CEPDB) and the much smaller experimental Harvard Organic Photovoltaic 15 dataset (HOPV15). The neural models generally performed better on the computational dataset, with the Attentive FP model reaching state-of-the-art performance with a test set mean squared error of 0.071. The experimental dataset proved much harder to fit, and all models performed rather poorly on it. In contrast to the computational dataset, the baseline models performed better than the neural models. To improve the ability of machine learning models to predict PCEs for OPVs, either better computational results that correlate well with experiments or more experimental data measured under well-controlled conditions are likely required.
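The headline CEPDB result is quoted as a test set mean squared error (MSE). As a minimal sketch, assuming the reference and predicted PCE values for a held-out test set are already available as plain lists, the metric can be computed as below. The function names `mse` and `r2` and the numbers are hypothetical illustrations, not code or data from the report. Because MSE scales with the spread of the target PCEs, a variance-normalised score (the coefficient of determination, R²) is shown alongside it; this is offered only as a generic aid for reading MSE values across datasets with different PCE ranges, not as a metric the report is claimed to use.

```python
from statistics import fmean


def mse(y_true: list[float], y_pred: list[float]) -> float:
    """Mean squared error between reference and predicted PCE values."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    return fmean((t - p) ** 2 for t, p in zip(y_true, y_pred))


def r2(y_true: list[float], y_pred: list[float]) -> float:
    """Coefficient of determination: 1 - MSE / variance of the targets."""
    mean = fmean(y_true)
    variance = fmean((t - mean) ** 2 for t in y_true)
    if variance == 0.0:
        raise ValueError("targets have zero variance; R^2 is undefined")
    return 1.0 - mse(y_true, y_pred) / variance


# Hypothetical test-set values (NOT from the report), PCE in %.
y_test = [2.0, 4.0, 6.0, 8.0]
y_hat = [2.5, 3.5, 6.5, 7.5]

print(mse(y_test, y_hat))  # 0.25
print(r2(y_test, y_hat))
```

The same MSE can correspond to very different R² values depending on how widely the target PCEs vary, which is why a small MSE on one dataset does not by itself imply comparable predictive quality on another.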


Material from this preprint has been published in ACS Omega.
